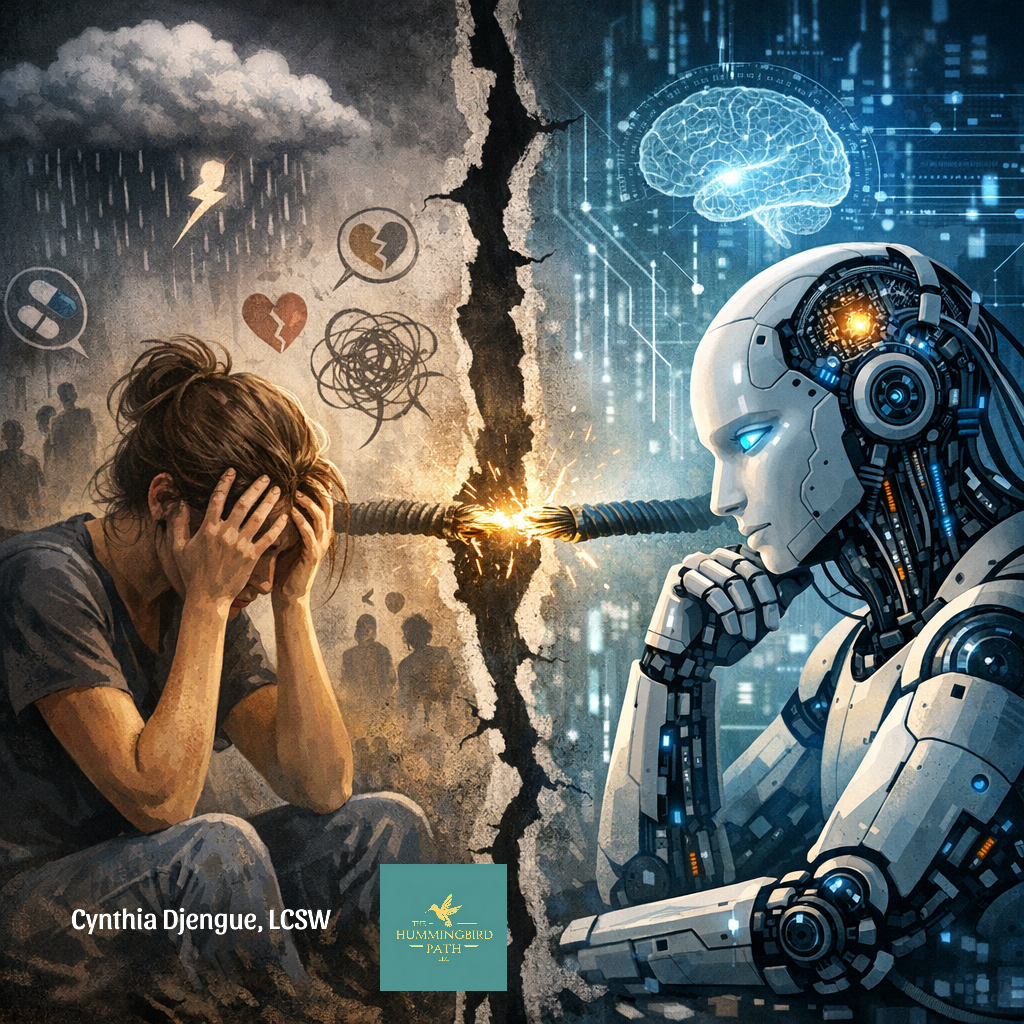

When Tragedy and Technology Intersect: Reflections on Therapist Safety, Culture, and the Rise of AI in Mental Health

Introduction

You know when something big happens in your industry, your city, or your neighborhood, and you feel reflective, perhaps emotional, and maybe even afraid? Many therapists are feeling that way right now after hearing that a colleague in our field, Rebecca White, was tragically killed - stabbed by a client she had dedicated herself to helping. It’s every therapist’s worst nightmare. We enter this profession knowing that vulnerability is part of the work: a client may become angry, leave a critical review, misinterpret an interaction, or, in rare but devastating cases, react violently to boundaries, attempts at behavior change, or the painful process of healing itself. And those of us who work with clients who have trauma histories, criminal backgrounds, or patterns of violence shoulder an even greater risk. These clinicians deserve our deepest gratitude.

Her death raises genuine concerns, though many questions may never be fully answered. The former client who murdered her was later found dead nearby - an apparent suicide. The questions that remain are the same ones that echo throughout therapy offices everywhere: How safe are we? What happened in the moments leading up to this? What circumstances push a client toward such an extreme act? And what can our profession learn from these moments?

The Realities of Therapist Work Environments

One detail that stood out was the time of the incident: around 9 p.m. Therapists often work long days and evening hours because clients need support outside the 9–5 window. Many of us don’t have the privilege of choosing our schedule if we want to remain available to the people we serve.

Therapy is intended to support, guide, and offer healing. Yet therapy happens in vastly different environments: some clinicians travel the world while working remotely, some work in peaceful home studios or wellness spaces, and others search for unhoused clients on the streets, visit jails, sit in prison interview rooms, or work in agencies where “fit” isn’t a priority and you take the clients you’re given.

I did community mental health work early in my career, providing therapy across six counties, driving into rural communities and meeting families in their homes. I was a brand‑new social worker in my twenties, and I was terrified. At the time, I lived in a house surrounded by 100 acres of timber. In the snowy winters, I would drive a dark, unlit road back to a home lined with glass doors looking into the woods. I slept with a knife. I eventually left that job and later moved away; only then did I realize how unsafe and unprepared I had felt.

The Shift to Telehealth: A New Form of Safety

Years passed, and then the pandemic transformed the field. Telehealth exploded. Many therapists felt relief: we could see clients across state lines from the safety of our homes or offices. We had full caseloads, and clients were grateful simply to have support during a period of isolation, conflict at home, and fear. Virtual care helped us reach people more consistently, and it helped us feel safer.

Enter AI: A Rapidly Changing Clinical Landscape

Then came AI.

This is not to say AI is inherently harmful; none of us yet fully understands what its long‑term impact will be. States, licensing boards, and regulatory bodies are scrambling to keep up. Meanwhile, tech entrepreneurs and coaching empires have rapidly integrated AI into nearly every part of healthcare and mental health: billing, documentation, marketing, diagnostics, and even direct service delivery. Technology moves quickly; regulation does not. And our national leadership is in no rush to slow it down.

What does all of this mean? We are losing human connection. And that loss has consequences.

AI’s Expanding Role in Mental Health

Usage Trends (2023–2026)

22% of U.S. adults use AI therapy apps

Over 50% use ChatGPT for mental‑health support

85% of people with mental‑health conditions remain untreated

Chatbots show a 64% reduction in depressive symptoms compared to control groups

Abandonment rates remain 45%–60%

43% of mental‑health professionals now use AI tools

AI has undeniable strengths. It can offer 24/7 support, reduce wait times, and reach underserved populations. But virtual human therapists can do these things too, without sacrificing connection.

What We Know About AI’s Psychological Effects

Diagnostic Capability

Systematic reviews show AI can identify depression and anxiety with 75–90% accuracy using speech, online behavior, EEG, and interviews. But diagnostic accuracy does not guarantee safety, wisdom, or ethical judgment.

Dependence and AI‑Induced Symptoms

Research identifies:

parasocial or delusional attachments to AI

emotional dysregulation or dependency

social withdrawal

occasional worsening of symptoms

Loneliness and Problematic Use

A 2025 MIT study found:

higher chatbot use correlated with emotional dependence

heavy users reported worse psychosocial outcomes

Clinical Quality Concerns

JAMA Psychiatry warns AI may:

reduce access to human care

erode clinician skill

cause unpredictable harm due to probabilistic outputs

Risks to Vulnerable Groups

The APA reports:

lack of validation or safety guarantees

documented harms to adolescents and vulnerable populations

AI must not replace clinicians during crises

How Does AI Relate to the Murder of a Therapist?

The connection is not literal, but it is deeply cultural.

Both reflect a society in which:

people are increasingly isolated

mental‑health needs are growing

human connection is thinning

systems are overwhelmed

technology is expanding faster than our ethics

Violence against therapists does not occur in a vacuum. It emerges from:

untreated or under‑treated mental illness

loneliness and social fragmentation

reliance on digital replacements for human relationships

unrealistic expectations of instant healing

and strained clinical systems that leave both clients and therapists vulnerable

As AI reshapes the industry, the emotional labor of therapy increases while safety decreases. Clients who already feel unseen may misinterpret boundaries as rejection. Those who are destabilized may spiral without the grounding presence of another human being. And clinicians, increasingly burdened, may find themselves more exposed than ever.

We are at a crossroads.

The tragedy of losing a colleague and the rise of AI are not separate stories; they are part of the same cultural shift toward disconnection.

Conclusion

In the end, the murder of a therapist is not simply a personal tragedy; it is a reflection of the fractures running through our society, our mental‑health system, and our rapidly shifting technological landscape. Research shows that disconnection, loneliness, and untreated mental illness deepen risk, and the encroachment of AI into the spaces where human presence once lived complicates this even further. As therapy becomes increasingly digitized, we must confront how easily real relational care can be displaced, how safety can be compromised, and how quickly human needs can be overshadowed by efficiency or innovation.

This moment demands grief, yes, but also clarity. It calls us to examine how we protect clinicians, how we support vulnerable clients, how we regulate emerging technologies, and how we safeguard the irreplaceable role of human connection in healing. What happened to Rebecca White is part of a larger story, one that urges us to hold research, reflection, and advocacy together as we navigate the future of mental‑health care. If we are to move forward with integrity, we must insist that technology serve humanity, not replace it, and that the heart of therapy remains what it has always been: two humans in connection, working toward meaning, safety, and change.

References

All About AI. (2026). AI in therapy: 2023–2026 usage statistics and mental health trends. https://allaboutai.com

Koutsouleris, N., & Borgwardt, S. (2024). AI‑based diagnostic accuracy for depression and anxiety: A systematic review. Springer Link. https://link.springer.com

MIT Media Lab. (2025). Emotional dependence and psychosocial outcomes in long‑term chatbot users: A longitudinal study. https://www.media.mit.edu

JAMA Psychiatry. (2025). AI in mental health: Risks, predictive limitations, and clinical threats. JAMA Network. https://jamanetwork.com

American Psychological Association. (2025). APA health advisory on AI use in mental health care. https://www.apa.org

Smith, L. (2025). Minds in crisis: How the AI revolution is impacting mental health. Mental Health Journal. https://www.mentalhealthjournal.org/articles/minds-in-crisis-how-the-ai-revolution-is-impacting-mental-health.html

Article by Cynthia Djengue, LCSW, LISW, Psychotherapist